Introduction

One of my Kotlin lambdas was throwing Too many open files exceptions and thus logging errors, but only nightly, when it got bursts of SQS messages to process.

To find out what was causing these errors, I followed these steps:

- Read up on what can be the causes

- Determine what/where in the code is not closing the file descriptors

- Find a solution to the issue

- Then fix the issue

After reading up, it turned out that it can be actual files not getting closed, but open connections also use file descriptors, so they count for the total number of open File Descriptors (FDs).

I also found out that for AWS Lambda Serverless, the maximum open file descriptors is

fixed to 1024. Normally in Linux systems you can modify that limit e.g with the

ulimit command, but

not in the lambdas execution runtimes. Thus a quick fix of increasing the open files limit wasn't possible.

Important to know too when analyzing this problem is that "

... Lambda doesn't send more than one invocation at a time to the same container. The container is not used for a second invocation until the first one finishes. If a second request arrives while a first one is running, the second one will run in a different container. When Lambda finishes processing the first request, this execution environment can then process additional requests for the same function. In general, each instance of your execution environment can handle at most 10 requests per second. This limit applies to synchronous on-demand functions, as well as functions that use provisioned concurrency. In you're unfamiliar with this limit, you may be confused as to why such functions could experience throttling in certain scenarios." Partially from:

https://docs.aws.amazon.com/lambda/latest/dg/lambda-concurrency.html My addition: note that in the above mentioned 10 requests per second performance, still those 10 requests are handled sequentially!!

Note that no events were lost in the end when the exceptions occurred; AWS lambda recovered by itself by providing the events again from the queue, since these were not processed due to the exception. It also scaled up the number of instances significantly, probably due to the burst and it detecting that messages were not getting processed sufficiently quick.

To the determine the cause of the open file descriptors, I tried several options to find out how many and which files are opened by what:

- Try by using a Java MXBean

- Try the File Leak Detector library

- Try via Linux commands

- Force Garbage Collections

- Examine the code for potential spots where files and connections are reopened over and over again

Not tried but could be an option to explore: track the network connections created, e.g get the open connections count from OkHttpClient. Something like this: OkHttpClient().newBuilder().build()..dispatcher().runningCallsCount()

Setup

AWS lambda

Kotlin 1.8.10

Java 17

Retrofit2

IntelliJ

Gradle

Java MXBean open files detection

This option only supports showing the amount of open file descriptors. Not which part(s) of the lambda have a given file descriptor in use.

val os: OperatingSystemMXBean = ManagementFactory.getOperatingSystemMXBean()

if (os is UnixOperatingSystemMXBean) {

logger.info("Number of open fd: " + (os as UnixOperatingSystemMXBean).openFileDescriptorCount)

}

Found via:

https://stackoverflow.com/questions/16360720/how-to-find-out-number-of-files-currently-open-by-java-application

Note the call will fail at the

moment the Too many files error starts to happen, because logging and

many other calls require a file descriptor; and the MXBean itself probably too.... So all you can see is that number of open file descriptors increase and increase up to the exception.

File Leak Detector open files detection

I used v1.13 since v1.15 was not available on Maven Central.

First you have to get this library on the command line when starting the Lambda. But after supplying the java agent to the

command line of the AWS lambda like this:

java -javaagent:lib/file-leak-detector.jar

the error during startup was:

Failed to find Premain-Class

manifest attribute in lib/file-leak-detector-1.13.jar

Error occurred during initialization

of VM

agent library failed to init:

instrument

That error shows up because the

MANIFEST.MF

file is missing the Premain-Class entry, which tells the runtime what the

main method is to start the agent.

I tried some other paths to

verify the path was correct; if the path is incorrect you get a

message like “Error opening zip file or JAR manifest missing”.

(note that I already had a -javaagent

argument on the command line for Datadog. Both added caused the

deployment to fail with a timeout; didn't further investigate why, I just

removed that Datadog -javaagent for now)

And indeed when I looked inside the

MANIFEST.MF of the file-leak-detector-v1.13.jar,

no such entry. I then downloaded the source code of the library from Github and noticed another jar file getting created: file-leak-detector-1.16-SNAPSHOT-jar-with-dependencies.jar

(note here I switched to v1.16-snapshot just to have the latest)

And in there, the Premium-Class is set!

Premain-Class: org.kohsuke.file_leak_detector.AgentMain

I then decided to add the new jar

locally to the build of the lambda, for testing purposes, as described here:

https://stackoverflow.com/questions/20700053/how-to-add-local-jar-file-dependency-to-build-gradle-file

The jar was put in the 'libs' directory

which I created in the root directory of the (IntelliJ) Gradle

project. Gradle depencency: implementation files('libs/file-leak-detector-1.16-SNAPSHOT-jar-with-dependencies.jar')

After that, the File Leak Detector

started up fine, as can be seen from these messages:

File leak detector installed

Could not load field socket from

SocketImpl: java.lang.NoSuchFieldException: socket

Could not load field serverSocket

from SocketImpl: java.lang.NoSuchFieldException: serverSocket

Note the last two messages are due to

Java17+ not allowing this anymore, you can find more details about

this when searching for those exact error messages in the File Leak Detector

source code.

I then did have SocketExceptions appear at the nightly runs too like

“Caused by: java.net.SocketException: Too many open files” so I

couldn't tell too much yet. It seems the lib-file-leak-detector is

then not dumping the open files, probably because the above mentioned Java 17+ issue. Or something else went wrong, at least I

couldn't see any dumps in AWS CloudWatch though.

So I set up my own listener from the

library, so I could then dump the open files whenever I wanted. It is possible, but no full example is given; the

*Demo.java examples give some ideas away. Here's what I used:

logger.info("Is leak detector agent installed = " + Listener.isAgentInstalled()")

if (Listener.isAgentInstalled()) {

try {

val b = ByteArrayOutputStream()

Listener.dump(b)

logger.info("The current open files Listener dump = $b")

val currentOpenFiles = Listener.getCurrentOpenFiles()

logger.info("The current open files Listener list size = ${currentOpenFiles.size}")

var jarFilesCounter = 0

currentOpenFiles.forEach {

when (it) {

is Listener.FileRecord -> {

if (!it.file.name.endsWith("jar")) {

logger.info("File named " + it.file + " is opened by thread:" + it.threadName)

} else {

jarFilesCounter++

}

}

else -> logger.info("Found record by Listener is not a file record, skipping")

}

}

logger.info("Of the open files, $jarFilesCounter are .jar files, those were skipped in the logging of the list of files currently open")

b.close()

} catch (ex: Exception) {

logger.error("Dump of current open files failed with exception: ", ex)

}

} else {

logger.info("Leak detector agent is not installed, so not dumping open files")

}

Note I skipped the jars during logging, which I noticed count for a

lot of the open files listed.

The

Listener.dump() lists

all open files, instead of showing how many times a given file is opened. I couldn't find anything mentioning the library does support this; would be a very useful feature.

I noticed the open files count was always lower than when using the MXBean. My guess is that the MXBean

does count the open Socket connections too. And thus is much more precise.

Linux commands open files detection

There are two ways in Kotlin (and Java) to execute a command on the command line:

p = Runtime.getRuntime().exec(command)

and

val pb = ProcessBuilder(command, arguments)

val startedProcess = pb.start()

I tried both ways. My goal was to use the 'lsof' command, but that was not available in the lambda runtime. Then I tried to get the user of the process. And the process ID of the lambda itself. Then via /procs/fd one could find what files are kept open by a give PID.

These commands worked:

val pb = ProcessBuilder("sh", "-c", "echo $$")

val startedProcess = pb.start()

val pb = ProcessBuilder("sh", "-c", "ls -al")

val startedProcess = pb.start()

val pb = ProcessBuilder("sh", "-c", "ls -al /proc")

val startedProcess = pb.start()

p = Runtime.getRuntime().exec("id -u $userName")

These didn't work:

p = Runtime.getRuntime().exec("/proc/self/status")

p = Runtime.getRuntime().exec("echo PID = $$")

p = Runtime.getRuntime().exec("$$")

val pb = ProcessBuilder("echo", "$$")

val pb = ProcessBuilder("$$")

p = Runtime.getRuntime().exec(command) // Exited with value 2, so probably invalid command

When I got the PID, I tried this to get the open FDs by this PID in different ways, but that failed:

val pb = ProcessBuilder("sh", "-c", "ls -al /proc/$pidFound/fd")

After listing all files in the current directory via this command:

val pb = ProcessBuilder("sh", "-c", "ls -al /proc")

I saw that none of the numbers (PIDs) listed there were matching the found PID! At this point I stopped further exploring this option, since then I wouldn't find the open files in /proc/$pidFound/fd anyway....

Force GC

A theory why the Too many open files error is appearing was that the Java runtime doesn't get enough time to clean up (garbage collect) the opened file descriptors. So to test this theory, I forced a Garbage Collect after each 50 invocations of the lambda instance. Of course calling System.gc() doesn't fully guarantee it will happen right at that moment, e.g when the runtime is too busy it will happen later.

To cater for that I also added a Thread.sleep() call. Yes this solution normally is a potential performance killer, but an option to verify the theory. This is the code I used:

nrOfInvocationsOfThisLambdaInstance++

if (nrOfInvocationsOfThisLambdaInstance % 50L == 0L) {

logger.info("$nrOfInvocationsOfThisLambdaInstance % 50 == 0, so going to garbage collect")

try {

System.gc()

} catch (e: Throwable) {

logger.error("Unable to garbage collect due to: ${e.message}. Full details in the exception.", e)

}

logger.info("$nrOfInvocationsOfThisLambdaInstance % 50 == 0, so going to runFinalization")

try {

System.runFinalization()

} catch (e: Throwable) {

logger.error("Unable to runFinalization due to: ${e.message}. Full details in the exception.", e)

}

logger.info("Going to sleep() so it hopefully gets time to GC...")

try {

Thread.sleep(5000)

} catch (i: InterruptedException) {

// ignore

}

}

And indeed, the Too many open files error was gone! But this couldn't really be the final acceptable solution of course.

Examine the code for keeping files open

See below.

Solution

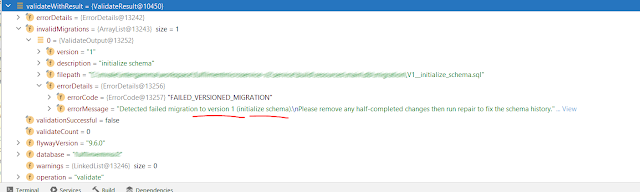

So at this point I only had some idea of how many open files there were at a certain point. I saw the number using the MXBean solution go up to about 1023 and then the Too many open files error started to appear.

In the code I did find a spot where it was opening and reading a configuration file on each incoming request! After moving that code into an object class (or init{} block, or val variable at class level), the Too many open files error started to appear already much later (as in: the number of open files count went up much slower and the exception occurred less).

So I was moving into the right direction! Also, the errors were all SocketExceptions now. After investigating the code some more and more, I noticed the OkHttpClient was getting created each time an HTTP request to an external third party was made (which is relatively slow of course). After also moving this part into an object class, the error was completely gone!

Conclusion: the tools gave some more insights on what was going on, and I learned quite few things on how/where/when file descriptors are used in lambdas, but in the end the problem was found during plain old code examination :)

Digg

Digg